The MicaSense RedEdge 3 is a small multispectral sensor package with five sensors covering the blue, green, red, red edge, and near infrared bands. It can be mounted on a variety of UASs. This project uses data from one of these sensors mounted on a DJI Phantom 3, collected on a flight over a plot of land near Fall Creek, Wisconsin. One benefit of this sensor is that a light meter and other attachments can be added to it. The sensor is named for the red edge band, which lies between the red and infrared bands and aids in the analysis of vegetation health.

Collecting information from all of these bands makes multispectral analysis possible. One form of analysis performed in this project is value added data analysis. This technique teaches the software to recognize different spectral signatures from user-supplied samples, which then lets the user run further analysis that differentiates segments of land cover. This is, however, just one of many analyses that can be run once a composite image of all of the bands is created. Other basic functions (also performed in this project) include viewing the invisible bands (red edge and infrared) in false color and running an NDVI (normalized difference vegetation index) function on the image, both of which aid in observing vegetation health.
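For reference, NDVI is computed per pixel as (NIR - Red) / (NIR + Red), giving values between -1 and 1, with healthy vegetation toward the high end. The following is a minimal sketch of that formula in plain Python, not the tool that was used in this project. The function names and the reflectance values are made up for illustration, and returning 0 for pixels with no signal is an assumption.

```python
def ndvi(nir, red):
    """Return NDVI for one pixel from near infrared and red reflectance."""
    denominator = nir + red
    if denominator == 0:
        # Assumption: treat pixels with no signal as 0 instead of failing.
        return 0.0
    return (nir - red) / denominator


def ndvi_grid(nir_band, red_band):
    """Apply ndvi() cell by cell to two equally sized 2-D lists."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Made-up reflectance values, not taken from the flight data.
nir = [[0.60, 0.30], [0.10, 0.00]]
red = [[0.10, 0.30], [0.30, 0.00]]
result = ndvi_grid(nir, red)
```

In this toy grid the first cell (high NIR, low red) scores highest, which is the pattern expected from healthy vegetation.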

Methods

To begin, the data was processed in Pix4D. The project was given a descriptive name for its project folder (20160904_fallcreek_rededge). The images were then added using the add directories function and selecting the folder that contained all of the images (Figure 1).

Figure 1

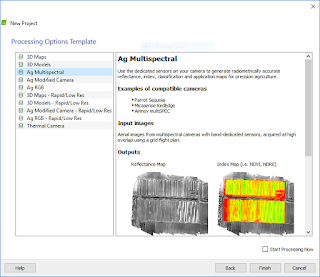

The remaining prompts were then clicked through with the sensor and coordinate system settings left at their defaults. On the last prompt, however, the template for agricultural multispectral imagery was selected (Figure 2).

Figure 2

After clicking finish, initial processing was run. The processing options were then changed so that options the template does not normally run could be run. Boxes were checked so that the processing settings matched those shown in Figure 3.

Figure 3

After the second and third processing steps were run, the resulting ray cloud appeared as shown in Figure 4. A quality report was also generated and is available here: https://drive.google.com/open?id=0B36dlU8PtG9pd1dvaV9JaHNyTnc

Figure 4

The resulting data (the non-transparent images that Pix4D created in the 2_mosaic directory inside the 3_dsm_ortho directory of the project folder) was brought into an ArcMap map. The TIFF files (one for each band) were then run through the Composite Bands (Data Management) tool to create a single composite raster of all of the bands. Special care had to be taken to add the bands to the tool in the order they fall on the electromagnetic spectrum (blue, green, red, red edge, near infrared). This is shown in Figure 5.
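Because the band order matters, it can help to sort the file list before handing it to the tool. The sketch below only shows that ordering step in plain Python. The file names and band suffixes are hypothetical (the actual Pix4D output names may differ), and the helper names are made up for illustration. The ordered list could then be supplied to Composite Bands, which is not called here.

```python
from pathlib import PurePath

# Order in which the bands fall on the electromagnetic spectrum.
# These suffixes are hypothetical; match them to the real file names.
SPECTRAL_ORDER = ["blue", "green", "red", "red_edge", "nir"]


def spectral_position(filename):
    """Return the spectral rank of a band file based on its name suffix."""
    stem = PurePath(filename).stem.lower()
    for position, band in enumerate(SPECTRAL_ORDER):
        # Match "_<band>" at the very end so "red" never matches "red_edge".
        if stem.endswith("_" + band):
            return position
    raise ValueError(f"no known band suffix in {filename!r}")


def order_bands(filenames):
    """Sort band files into blue, green, red, red edge, near infrared."""
    return sorted(filenames, key=spectral_position)


# Hypothetical file names in an arbitrary starting order.
unordered = [
    "fallcreek_nir.tif",
    "fallcreek_red.tif",
    "fallcreek_blue.tif",
    "fallcreek_red_edge.tif",
    "fallcreek_green.tif",
]
ordered = order_bands(unordered)
```

Matching on the end of the name, rather than searching anywhere in it, avoids confusing the red band with the red edge band or with a project name that contains "rededge".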

Figure 5

The resulting composite raster was then added to the ArcMap map three times to create three different layers. Each layer then had its symbology (the band on each channel, Figure 6) and labeling (table of contents, Figure 7) changed so that real color RGB, false color infrared, and false color red edge images could be created. These are shown in their respective maps in the results section below.
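The three renderings differ only in which band of the composite is assigned to the red, green, and blue display channels. The sketch below writes that idea out as a lookup table. Only the real color assignment (3,2,1) is stated in this post. The two false color assignments are common conventions assumed here for illustration and may not match what Figure 6 showed.

```python
# Band numbers in the composite, following the spectral order used above.
BAND_INDEX = {"blue": 1, "green": 2, "red": 3, "red edge": 4, "nir": 5}

# Bands shown on the (red, green, blue) display channels for each layer.
RENDERINGS = {
    "real color RGB": ("red", "green", "blue"),
    # Assumption: conventional color infrared assignment.
    "false color infrared": ("nir", "red", "green"),
    # Assumption: red edge swapped in where near infrared would go.
    "false color red edge": ("red edge", "red", "green"),
}


def channel_bands(rendering):
    """Return the band numbers for the red, green, and blue channels."""
    return tuple(BAND_INDEX[name] for name in RENDERINGS[rendering])


for name in RENDERINGS:
    print(name, channel_bands(name))
```

In both false color layers the invisible band is placed on the red channel, so areas that reflect strongly in that band (typically healthy vegetation) show up in red tones.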

Figure 6

Figure 7

After the data and files included with the lesson were downloaded, the tasks in the ArcGIS Pro project were followed. Instead of the data provided by the lesson, the data supplied by the instructor of this course was used. Specific bands were extracted from the composite image created earlier in ArcMap, and similar pixels in that file were then grouped into segments by a tool embedded in the tasks of the ArcGIS Pro project. From here, the segmented image and the original mosaic were brought into ArcMap, where the Image Classification toolbar and the Training Sample Manager were used to create a shapefile with training samples for each land classification (roof, road, wood, cars, vegetation, and shadows). This is shown in Figure 8, with the functions that were needed highlighted. Customize is highlighted because the Spatial Analyst extension must be turned on before the Image Classification toolbar can be used.

Figure 8

Figure 9

After the classifier was trained with the shapefile by following the instructions, the raster was classified with the resulting classifier definition file. This step was tricky because the tool would not save the raster in the geodatabase I was working in, only in the folder containing it. In addition, the first classified raster was just a big pink square because the classifier labeled everything as vegetation. At this point a new training shapefile was created from new selections, and the subsequent steps were rerun.

After this hurdle was overcome, the image was reclassified into a simple two-class scheme (impervious and permeable) using the parameters given in the directions of the task window. The resulting TIFF file was then mapped in ArcMap and can be seen below in the results section.
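Conceptually, this reclassification is a lookup that collapses the six training classes into two. The sketch below illustrates the idea on a tiny made-up grid. It is not the ArcGIS tool, and the class-to-surface mapping and output codes are assumptions for illustration only, since the actual parameters came from the task directions and are not reproduced here.

```python
IMPERVIOUS, PERMEABLE = 0, 1  # hypothetical output codes

# Assumed mapping for illustration; the lesson's parameters may differ.
CLASS_TO_SURFACE = {
    "roof": IMPERVIOUS,
    "road": IMPERVIOUS,
    "cars": IMPERVIOUS,
    "wood": IMPERVIOUS,
    "vegetation": PERMEABLE,
    "shadows": PERMEABLE,
}


def reclassify(grid, mapping):
    """Replace each land cover class in a 2-D grid with its surface code."""
    return [[mapping[cell] for cell in row] for row in grid]


def impervious_fraction(grid):
    """Return the share of cells coded as impervious."""
    cells = [cell for row in grid for cell in row]
    return cells.count(IMPERVIOUS) / len(cells)


# Made-up classified grid, not taken from the project output.
classified = [
    ["roof", "vegetation", "vegetation"],
    ["road", "cars", "shadows"],
]
surfaces = reclassify(classified, CLASS_TO_SURFACE)
share = impervious_fraction(surfaces)
```

Once the grid holds only two codes, summaries such as the impervious share of an area become a simple count.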

Results and Discussion

The resulting maps are shown below.

Figure 10

Figure 10 shows the real color imagery (3,2,1). The imagery has stripes of color distortion and other errors (odd shapes surrounding the deck). These errors can be traced back to a few missteps in the field. First, the color distortion in some areas can be traced to clouds obscuring parts of the area. This could have been corrected had a calibration disc been set out and the images calibrated later in software processing, but this step was skipped out of inexperience because this was the operator's first flight with the sensor. Another contributor to these distortions is the lack of a light meter, which could have been bought and attached to the sensor. The odd shapes can possibly be traced to insufficient overlap between images; more images could have been taken, spaced closer together. Another issue that could have lowered the final quality was that images were taken while the DJI Phantom 3 was still climbing. These images were more difficult for the software to tie into the final mosaic because of their difference in altitude and spatial resolution.
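The link between altitude, image spacing, and overlap can be made concrete with some simple geometry. The sketch below assumes a camera pointing straight down over flat ground, so the ground footprint along the flight line is 2 × altitude × tan(FOV / 2). The function names, field of view, altitudes, and spacing are all placeholder values for illustration, not the settings of this flight.

```python
import math


def footprint_m(altitude_m, fov_deg):
    """Ground distance covered by one image along the flight direction."""
    return 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)


def forward_overlap(altitude_m, fov_deg, spacing_m):
    """Fraction of one image shared with the next one along the line."""
    overlap = 1 - spacing_m / footprint_m(altitude_m, fov_deg)
    return max(0.0, overlap)  # no shared ground once spacing exceeds footprint


# Placeholder values: 40 degree field of view, one image every 20 m.
at_cruise = forward_overlap(altitude_m=100, fov_deg=40, spacing_m=20)
while_climbing = forward_overlap(altitude_m=50, fov_deg=40, spacing_m=20)
print(f"overlap at 100 m: {at_cruise:.0%}, at 50 m: {while_climbing:.0%}")
```

With the same spacing, an image taken at half the altitude covers half the ground distance and overlaps its neighbor much less, which is consistent with the trouble the software had tying in images captured during the climb.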

Figure 11

Figure 12

Figure 13

Conclusion

Multispectral imagery is a versatile and valuable type of data. A multispectral sensor such as the one used in this project, combined with a UAS, can collect data at higher spatial resolution than available multispectral satellite data, and at any time. This data can then be processed to find permeable and impermeable surfaces, or displayed as it was in this project (or as NDVI) to view vegetation health. Once kinks with flight planning, light metering, and calibration are worked out and GCPs are added, highly accurate, high-quality data can be produced.
